Running AI models locally on your computer has become increasingly popular, offering benefits like enhanced privacy, offline access, and customization. DeepSeek, a family of large language models with openly available weights, is a popular choice for local use. This guide walks you through setting up and running DeepSeek on your local machine while following best practices for performance, security, and ethical use.

Understanding DeepSeek and Its Requirements

Before diving into the technical steps, let’s clarify what DeepSeek is and why you might want to run it locally. DeepSeek is a family of large language models (LLMs) built for tasks such as text generation, code generation, and question answering. Running it locally means you can use its capabilities without relying on cloud-based services, which gives you full control over your data and operations.

Why Run DeepSeek Locally?

- Privacy: Sensitive data remains on your device.

- Offline Access: No internet connection required after setup.

- Customization: Fine-tune the model for specific use cases.

- Cost Efficiency: Avoid cloud service fees.

Prerequisites

To run DeepSeek smoothly, ensure your system meets these requirements:

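- Hardware:

  - CPU: A modern multi-core processor (Intel i5/i7 or AMD Ryzen 5/7).

  - RAM: Minimum 16GB (32GB or more for larger models, since memory needs grow with parameter count).

  - Storage: At least 20GB of free space (a 7B-parameter model stored in 16-bit precision takes about 14GB on its own).

  - GPU (Optional): NVIDIA GPU with CUDA support (e.g., RTX 3060 or higher) for accelerated performance. On Apple Silicon Macs, PyTorch can use the built-in GPU through its MPS backend.

- Software:

  - Operating System: Windows 10/11, macOS (Intel/Apple Silicon), or Linux (Ubuntu/Debian).

  - Python: Version 3.9 or newer (recent PyTorch releases no longer support 3.8).

  - Package Manager: pip or conda.

  - Git: For cloning repositories.

  - NVIDIA driver (if using an NVIDIA GPU): The prebuilt PyTorch packages bundle the CUDA runtime, so the separate CUDA Toolkit is usually needed only for compiling custom extensions.

- Accounts:

  - GitHub: To browse DeepSeek’s repositories (public repositories can be cloned without an account).

  - Hugging Face (optional): For downloading model weights.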
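The RAM and storage figures above are rough guides. The dominant cost is the weights themselves, which take approximately parameter count × bytes per parameter. The small helper below applies that formula. It is a back-of-the-envelope sketch: the parameter counts in the loop are illustrative, not a list of specific DeepSeek releases, and real usage is higher once activations and the KV cache are included.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate size of the weights alone in GB (1 GB = 10**9 bytes).

    Actual memory use is higher: activations, the KV cache, and framework
    overhead come on top of this figure.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative parameter counts only.
    for params in (1.5e9, 7e9, 67e9):
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in ("fp16", "int8", "int4")
        )
        print(f"{params / 1e9:g}B params -> {row}")
```

For example, a 7B-parameter model needs roughly 14 GB for its weights in fp16 but about 3.5 GB with 4-bit quantization. This is why quantized weights can make larger models fit on machines with limited RAM or VRAM.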

Step 1: Setting Up Your Environment

A clean and isolated environment prevents dependency conflicts. Here’s how to set it up:

Install Python and Git

Download Python from python.org and install it. Check the installation with `python --version` (on macOS and Linux the command may be `python3 --version`).

Install Git from git-scm.com. Verify it with `git --version`:

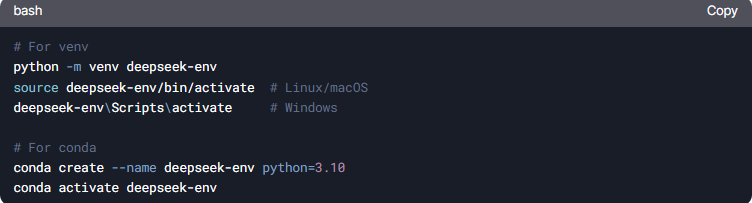
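You can also check both prerequisites from a single Python script, which is useful on machines where several Python versions are installed. This is a minimal sketch. The (3, 9) minimum is an assumption, so adjust it to whatever your DeepSeek release documents. `python_ok` and `git_version` are hypothetical helper names.

```python
import shutil
import subprocess
import sys
from typing import Optional, Tuple

# Assumption: 3.9 as the floor; change it to match your DeepSeek release.
MIN_PYTHON: Tuple[int, int] = (3, 9)


def python_ok(minimum: Tuple[int, int] = MIN_PYTHON) -> bool:
    """True if the running interpreter is at least `minimum` (major, minor)."""
    return sys.version_info[:2] >= minimum


def git_version() -> Optional[str]:
    """Return the `git --version` output, or None if Git is not on PATH."""
    if shutil.which("git") is None:
        return None
    result = subprocess.run(["git", "--version"], capture_output=True, text=True, check=False)
    return result.stdout.strip() or None


if __name__ == "__main__":
    print("Python", sys.version.split()[0], "OK" if python_ok() else "is too old")
    print(git_version() or "Git not found on PATH")
```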

Create a Virtual Environment

Use venv (built into Python) or conda (for advanced users):

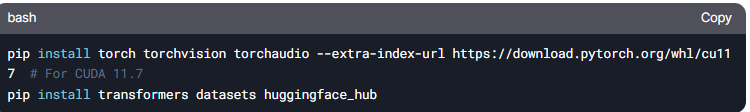
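Python's built-in `venv` module can create the same environment programmatically. It can also tell you whether a script is already running inside one, which is a quick guard against installing packages into your system Python. This sketch uses only the standard library; the helper names and the `deepseek-env` directory name are hypothetical.

```python
import sys
import venv
from pathlib import Path


def in_virtualenv() -> bool:
    """True when the running interpreter belongs to a virtual environment."""
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


def create_env(env_dir: str, with_pip: bool = True) -> Path:
    """Create a virtual environment (same effect as `python -m venv env_dir`)."""
    path = Path(env_dir)
    venv.create(path, with_pip=with_pip)
    return path
```

After `create_env("deepseek-env")`, activate the environment with `source deepseek-env/bin/activate` on macOS and Linux, or `deepseek-env\Scripts\activate` on Windows, before installing any packages.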

Step 2: Installing Dependencies

DeepSeek relies on libraries like PyTorch, TensorFlow, or Hugging Face’s transformers. Install them using pip:

Note: Adjust the PyTorch command based on your CUDA version (check with

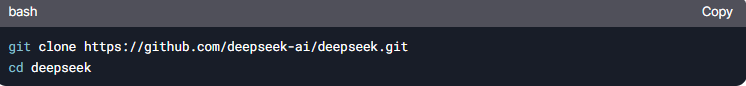

nvidia-smi).Downloading DeepSeek

Clone the relevant DeepSeek repository from GitHub (substitute the URL of the specific project you need):

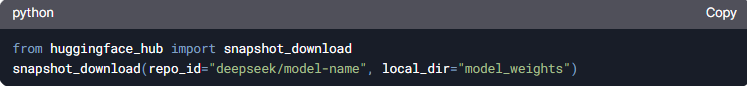
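If you would rather script this step, you can wrap the clone in Python. The sketch below assumes Git is on your PATH. The URL (the deepseek-ai/DeepSeek-V3 repository on GitHub) is only an example, and `clone_command` and `clone` are hypothetical helper names. The `--depth 1` option makes a shallow clone, which skips the commit history and saves disk space. It is equivalent to running `git clone --depth 1 <url>`.

```python
import subprocess
from typing import List, Optional

# Example URL; assumption: substitute the DeepSeek repository you actually need.
REPO_URL = "https://github.com/deepseek-ai/DeepSeek-V3.git"


def clone_command(url: str, dest: Optional[str] = None, shallow: bool = True) -> List[str]:
    """Build the git clone argument list; --depth 1 skips the full history."""
    cmd = ["git", "clone"]
    if shallow:
        cmd += ["--depth", "1"]
    cmd.append(url)
    if dest:
        cmd.append(dest)
    return cmd


def clone(url: str = REPO_URL, dest: Optional[str] = None) -> None:
    """Run git clone, raising CalledProcessError if it fails."""
    subprocess.run(clone_command(url, dest), check=True)
```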

If the model weights are hosted on Hugging Face, use the `huggingface_hub` library to download them:

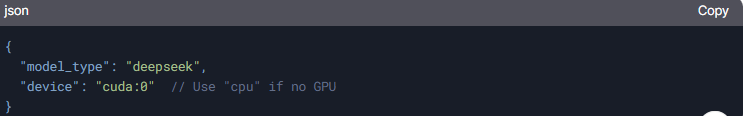
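Here is a minimal sketch using `snapshot_download` from `huggingface_hub` (install it with `pip install huggingface_hub`). The repository id, the `models/deepseek` target folder, and the file patterns are all assumptions to adapt. The `allow_patterns` argument restricts the download to matching files. `selected_files` is a hypothetical helper that previews the same shell-style wildcard filter locally, so you can check the patterns before downloading several gigabytes.

```python
from fnmatch import fnmatch
from typing import Iterable, List

# Example repository id; confirm the exact name on huggingface.co before use.
REPO_ID = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"

# Assumption: config, tokenizer, and safetensors weights are all you need.
ALLOW_PATTERNS = ["*.json", "*.safetensors", "tokenizer*"]


def selected_files(filenames: Iterable[str], patterns: List[str] = ALLOW_PATTERNS) -> List[str]:
    """Preview which repository files the wildcard patterns would keep."""
    return [name for name in filenames if any(fnmatch(name, p) for p in patterns)]


def download_weights(repo_id: str = REPO_ID, local_dir: str = "models/deepseek") -> str:
    """Download matching files into local_dir and return the folder path."""
    # Imported lazily so the helpers above work without huggingface_hub.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id, local_dir=local_dir, allow_patterns=ALLOW_PATTERNS)
```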

Configuring the Model

Most models ship with a configuration file (e.g., config.json). Adjust its hardware-related settings, such as the target device and numeric precision, to match your machine:

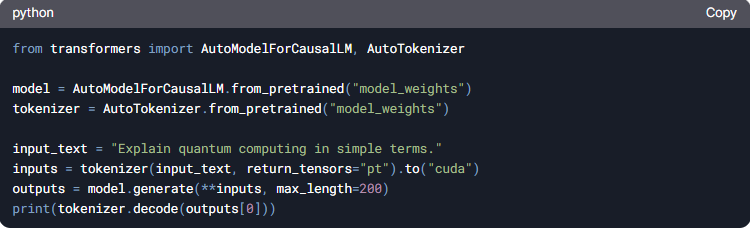
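A misspelled key in a hand-edited JSON file is often silently ignored. One hedged alternative is to keep your own hardware choices in a small separate settings file and validate it when loading. The `RunSettings` fields and the `load_settings` helper below are hypothetical and are not part of any DeepSeek or Hugging Face format.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class RunSettings:
    """Hypothetical local run settings, kept separate from the model's own config."""

    device: str = "cpu"        # "cuda", "mps", or "cpu"
    dtype: str = "float32"     # a safe default on CPU; GPUs usually use float16/bfloat16
    max_new_tokens: int = 256  # cap on tokens generated per request

    def __post_init__(self) -> None:
        if self.device not in {"cuda", "mps", "cpu"}:
            raise ValueError(f"unsupported device: {self.device!r}")
        if self.max_new_tokens <= 0:
            raise ValueError("max_new_tokens must be positive")


def load_settings(text: str) -> RunSettings:
    """Parse JSON settings, rejecting unknown keys such as typos."""
    data = json.loads(text)
    known = {f.name for f in fields(RunSettings)}
    unknown = set(data) - known
    if unknown:
        raise ValueError(f"unknown keys: {sorted(unknown)}")
    return RunSettings(**data)
```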

Running DeepSeek

Execute a test script to ensure everything works. Create a demo.py file:
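Here is a minimal sketch of what demo.py could contain, assuming PyTorch and `transformers` are installed in your environment. The checkpoint `deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B` is only an example, chosen for its small size. Substitute the DeepSeek model you downloaded, or the local folder you saved it to. The helper names (`build_messages`, `pick_device`, `generate`) are hypothetical. The first run downloads the weights unless they are already cached.

```python
"""demo.py: minimal local smoke test for a DeepSeek chat model (a sketch)."""

# Assumption: an example checkpoint; a local folder path also works here.
MODEL_ID = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"
PROMPT = "In one sentence, what is a Python virtual environment?"


def build_messages(prompt: str) -> list:
    """Wrap a single user prompt in the message format chat templates expect."""
    return [{"role": "user", "content": prompt}]


def pick_device(torch) -> str:
    """Prefer an NVIDIA GPU, then Apple Silicon (MPS), then the CPU."""
    # The torch module is passed in so this file imports without PyTorch installed.
    if torch.cuda.is_available():
        return "cuda"
    if torch.backends.mps.is_available():
        return "mps"
    return "cpu"


def generate(model_id: str, prompt: str, max_new_tokens: int = 128) -> str:
    """Load the tokenizer and model, then generate a reply to one prompt."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = pick_device(torch)
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # torch_dtype="auto" keeps the precision stored in the checkpoint.
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto").to(device)

    # Render the conversation as plain text with the model's own chat template.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    # The template already inserts special tokens, so don't add them twice.
    inputs = tokenizer(text, return_tensors="pt", add_special_tokens=False).to(device)

    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens and decode only the newly generated ones.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


def main() -> None:
    try:
        print(generate(MODEL_ID, PROMPT))
    except ImportError as exc:
        print(f"Missing dependency ({exc}); install torch and transformers first.")
    except OSError as exc:
        print(f"Could not load {MODEL_ID!r}: {exc}")


if __name__ == "__main__":
    main()
```

R1-style distilled models may print their reasoning before the final answer. If the reply is cut off, raise `max_new_tokens`.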

Run the script from your activated virtual environment with `python demo.py`.

Troubleshooting Common Issues

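- Dependency Conflicts: Use `pip freeze > requirements.txt` to record working package versions so the environment can be reproduced later.

- Out-of-Memory Errors: Reduce batch size, use a smaller model, or load the weights in lower precision.

- CUDA Errors: Update your NVIDIA driver and make sure the installed PyTorch build matches your CUDA version.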
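When debugging CUDA errors, two common culprits are a CPU-only PyTorch build and a missing package. The sketch below reports installed package versions (the same information `pip freeze` records) and which device PyTorch can actually use. It degrades gracefully when PyTorch is absent. The package list and helper names are assumptions to adapt. A `None` value for `torch_cuda_build` typically means a build without CUDA support.

```python
import importlib.util
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

PACKAGES = ("torch", "transformers", "huggingface_hub")


def package_versions(names: Iterable[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if missing."""
    found: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


def accelerator_report() -> dict:
    """Summarize which device PyTorch can use; falls back to CPU without torch."""
    if importlib.util.find_spec("torch") is None:
        return {"device": "cpu", "torch_cuda_build": None, "gpu": None}
    import torch

    # torch.version.cuda is None for builds compiled without CUDA.
    report = {"torch_cuda_build": torch.version.cuda, "gpu": None}
    if torch.cuda.is_available():
        report["device"] = "cuda"
        report["gpu"] = torch.cuda.get_device_name(0)
    elif torch.backends.mps.is_available():
        report["device"] = "mps"
    else:
        report["device"] = "cpu"
    return report


if __name__ == "__main__":
    print(package_versions())
    print(accelerator_report())
```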

Best Practices for Local AI Models

- Regular Updates: Pull the latest code and weights.

- Resource Monitoring: Use tools like `htop` or `nvidia-smi`.

- Ethical Use: Audit outputs for bias or misinformation.
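To log GPU memory from a script instead of watching `nvidia-smi` by hand, you can call it with its query flags and parse the CSV it prints. This sketch assumes an NVIDIA GPU with `nvidia-smi` on the PATH. The helper names are hypothetical, and the values are in MiB.

```python
import shutil
import subprocess
from typing import List, Tuple

QUERY = ["nvidia-smi", "--query-gpu=memory.used,memory.total", "--format=csv,noheader,nounits"]


def parse_gpu_memory(csv_text: str) -> List[Tuple[int, int]]:
    """Parse 'used, total' lines (MiB) into one (used, total) tuple per GPU."""
    rows = []
    for line in csv_text.strip().splitlines():
        used, total = (int(part) for part in line.split(","))
        rows.append((used, total))
    return rows


def query_gpu_memory() -> List[Tuple[int, int]]:
    """Return per-GPU memory usage, or an empty list when nvidia-smi is missing."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_memory(result.stdout)
```

Calling `query_gpu_memory()` every few seconds while the model generates text shows how close you are to running out of VRAM. On machines without `nvidia-smi`, it simply returns an empty list.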

Conclusion

Running DeepSeek locally empowers you to harness AI capabilities securely and efficiently. By following this guide, you’ve set up a robust environment, configured the model, and learned to troubleshoot issues. Always refer to official documentation for updates and stay mindful of ethical considerations.